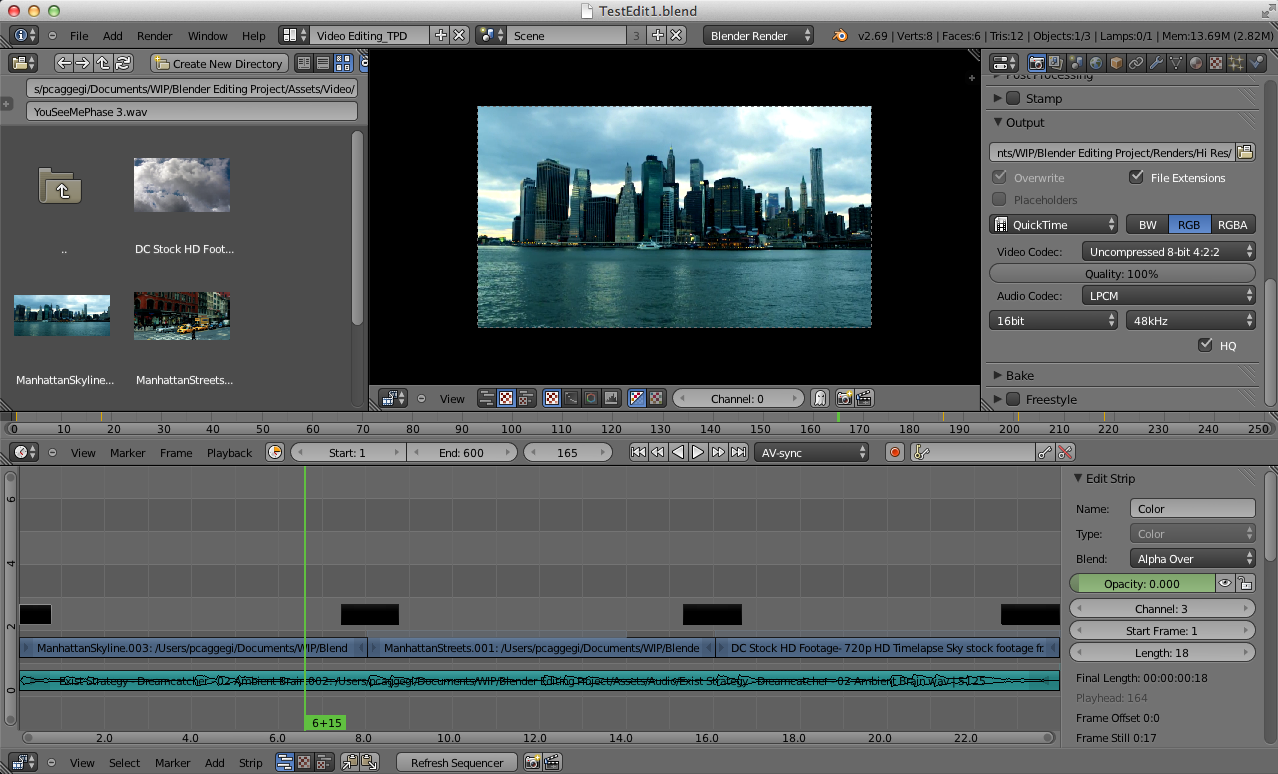

- BLENDER VIDEO EDITING TEXT HOW TO

- BLENDER VIDEO EDITING TEXT MOVIE

- BLENDER VIDEO EDITING TEXT INSTALL

- BLENDER VIDEO EDITING TEXT CODE

Other strip types can be converted to movie strips with a separate add-on. For creating a mask on top of a clip in the Sequencer, another add-on can be used to load the clip as a background in the Blender Image Editor. The created mask can then be added to the VSE as a strip and converted to video with the strip-conversion add-on.

Restrictions for using the AI models: the models can only be used for non-commercial purposes.

Examples: Img2img: Painting Hammershoi.mp4, 3160-3714.mp4. Video to video: Burger4.mp4, bagel.mp4.

BLENDER VIDEO EDITING TEXT MOVIE

Since the Generative AI add-on can only take image or movie strips as input, you'll need to convert other strip types to movie strips first.

By default, a Hugging Face diffusers model is downloaded from the hub and saved to a local cache directory. The cache directory defaults to:

- On Linux and macOS: `~/.cache/huggingface/hub`
- On Windows: `%userprofile%\.cache\huggingface\hub`

Here you can locate and delete the individual models.

On 6 GB of VRAM, I typically render images first at 1024x512 with SDXL, and then do img2vid with Zeroscope XL at 768x384x10 or 640x320x17.

For audio, try prompts like: bag pipes playing a funeral dirge, punk rock band playing hardcore song, techno dj playing deep bass house music, and acid house loop with jazz. Or: Voice of God judging mankind, woman talking about celestial beings, hammer on wood. Use MAN: or WOMAN: to bias the output towards that speaker. If the audio breaks up, try processing longer sentences.
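To see which models are taking up space before deleting any, a minimal standard-library sketch could walk the cache folder. It assumes the default location listed above (environment variables such as `HF_HOME` can move the cache, which this sketch ignores) and the hub's `models--<org>--<name>` folder naming. `list_cached_models` is a hypothetical helper, not part of the add-on.

```python
from __future__ import annotations

from pathlib import Path


def default_hub_cache() -> Path:
    """Default Hugging Face hub cache (ignores HF_HOME-style overrides)."""
    # Path.home() resolves %userprofile% on Windows and ~ on Linux/macOS,
    # so one expression covers all three platforms.
    return Path.home() / ".cache" / "huggingface" / "hub"


def list_cached_models(cache_dir: Path) -> list[tuple[str, int]]:
    """Return (repo_id, size_in_bytes) for every cached model repository."""
    models: list[tuple[str, int]] = []
    if not cache_dir.is_dir():
        return models
    for entry in sorted(cache_dir.iterdir()):
        # Assumed layout: each model lives in a folder "models--<org>--<name>".
        if not (entry.is_dir() and entry.name.startswith("models--")):
            continue
        repo_id = entry.name[len("models--"):].replace("--", "/")
        # Count regular files only: snapshot entries are often symlinks into
        # "blobs", so skipping links avoids counting the same data twice.
        size = sum(
            f.stat().st_size
            for f in entry.rglob("*")
            if f.is_file() and not f.is_symlink()
        )
        models.append((repo_id, size))
    return models


if __name__ == "__main__":
    for repo_id, size in list_cached_models(default_hub_cache()):
        print(f"{size / 1e9:8.2f} GB  {repo_id}")
```

Deleting a model then amounts to removing its `models--...` folder. If the `huggingface_hub` package is installed, its `huggingface-cli scan-cache` command gives a similar overview.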

Tips:

- If the image of your renders breaks, use the resolution given in the Model Card in the Preferences.
- If playback stutters, select a strip > Menu > Strip > Movie Strip > Set Render Size.
- If you get a message that CUDA is out of memory, restart Blender to free up memory and make it stable again.
- When processing several selected strips at once (batch processing), the file name and, if found, the seed value are automatically inserted into the prompt and seed fields. This behaviour can be switched off in the add-on preferences.
- The Stable Diffusion models for generating images have been used a lot, so there are plenty of prompt suggestions online.
- The Animov models have been trained on anime material, so adding "anime" to the prompt is necessary, especially for the Animov-512x model.
- SDXL handles most of the styles.

The add-on's panel is located in Video Sequence Editor > Sidebar > Generative AI.
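The batch behaviour, where a file name becomes a prompt and a trailing number becomes a seed, could be sketched like this. The naming scheme `<prompt words>_<seed>.<ext>` and the helper `prompt_and_seed_from_filename` are hypothetical illustrations; the add-on's actual parsing rules may differ.

```python
from __future__ import annotations

import re
from pathlib import Path

# Hypothetical naming scheme: "<prompt words>_<seed>.<ext>",
# e.g. "red_fox_in_snow_1234.png". Not the add-on's documented format.
_SEED_SUFFIX = re.compile(r"^(?P<stem>.*?)_(?P<seed>\d+)$")


def prompt_and_seed_from_filename(filename: str) -> tuple[str, int | None]:
    """Turn a strip's file name into a prompt and, if present, a seed."""
    stem = Path(filename).stem  # drop folders and the extension
    seed = None
    match = _SEED_SUFFIX.match(stem)
    if match:
        # A trailing "_<digits>" is treated as the seed value.
        stem, seed = match.group("stem"), int(match.group("seed"))
    prompt = stem.replace("_", " ").strip()
    return prompt, seed


if __name__ == "__main__":
    print(prompt_and_seed_from_filename("red_fox_in_snow_1234.png"))
    print(prompt_and_seed_from_filename("bagel.mp4"))
```

When no seed is found, returning `None` leaves the choice of seed (random or fixed) to the caller.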

BLENDER VIDEO EDITING TEXT INSTALL

In the add-on preferences, you can install dependencies, set the Movie Model Card, and set the Sound Notification. If any Python modules are missing, a separate add-on can be used to install them manually.
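As an alternative to a helper add-on, a missing module can usually be installed from Blender's Python console by calling pip through the running interpreter. This is a generic sketch, not the add-on's own installer; it assumes a recent Blender, where `sys.executable` points at the bundled Python. `pip_install_command` and `install` are hypothetical helper names.

```python
from __future__ import annotations

import subprocess
import sys


def pip_install_command(packages: list[str], upgrade: bool = False) -> list[str]:
    """Build a pip command that targets the interpreter running this code."""
    # Inside recent Blender versions, sys.executable is Blender's bundled
    # Python, so packages land where the add-on can import them.
    cmd = [sys.executable, "-m", "pip", "install"]
    if upgrade:
        cmd.append("--upgrade")
    return cmd + list(packages)


def install(packages: list[str]) -> None:
    # ensurepip bootstraps pip if the bundled Python ships without it;
    # it exits successfully when pip is already present.
    subprocess.check_call([sys.executable, "-m", "ensurepip"])
    subprocess.check_call(pip_install_command(packages))
```

For example, `install(["xformers"])` would install a single package. On Windows, the same write-permission caveat as for the add-on applies, so Blender may need to run as Administrator.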

BLENDER VIDEO EDITING TEXT HOW TO

First, download and install git for your platform. It must be on PATH, or Bark will fail. (As for Linux, if anything differs in the installation, please share instructions.)

- On Windows, right-click the Blender icon and choose "Run Blender as Administrator" (or you'll get write permission errors).
- Install the add-on as usual: Preferences > Add-ons > Install > select file > enable the add-on.
- In the Generative AI add-on preferences, hit the "Install Dependencies" button. Note that you can change which model cards are used in the various modes here (video, image, and audio).
- When the installation is done, it reports that it is finished (if there are any vital errors, let me know).
- Open the add-on UI in the Sequencer > Sidebar > Generative AI.
- The first time any model is executed, many GB have to be downloaded, so go grab lots of coffee.
- If it says "ModuleNotFoundError: Refer to ... for more information on how to install xformers", try restarting Blender.
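Since Bark fails when git is missing from PATH, it can help to check for it before installing dependencies. A minimal sketch using only the standard library; `find_git` and `git_version` are hypothetical helper names, and the check only looks at PATH, which is the requirement stated above.

```python
from __future__ import annotations

import shutil
import subprocess


def find_git(path: str | None = None) -> str | None:
    """Return the full path to git, or None if it is not on PATH."""
    # With path=None, shutil.which searches the current PATH environment.
    return shutil.which("git", path=path)


def git_version() -> str | None:
    """Return git's version string, or None if git cannot be found."""
    git = find_git()
    if git is None:
        return None
    result = subprocess.run([git, "--version"], capture_output=True, text=True)
    return result.stdout.strip() or None


if __name__ == "__main__":
    version = git_version()
    if version is None:
        print("git was not found on PATH; install it before installing dependencies.")
    else:
        print(f"Found {version}")
```

Running it from Blender's Python console shows whether Blender itself can see git. That matters because a terminal may have a different PATH than Blender does.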

BLENDER VIDEO EDITING TEXT CODE
